OpenAI has made a landmark release by publishing its first open-weight GPT‑class models in over five years, introducing gpt‑oss‑20b and gpt‑oss‑120b, both under the permissive Apache 2.0 license.

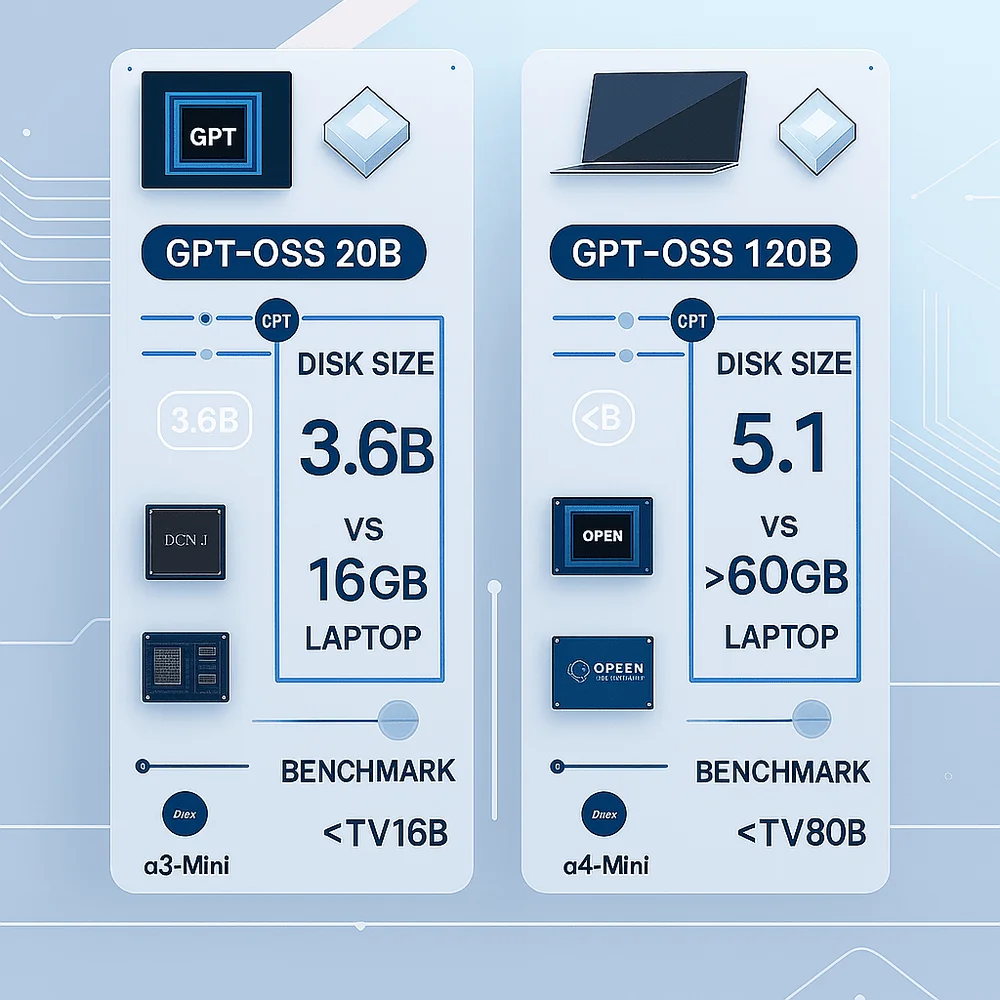

The 20B version is a mixture‑of‑experts (MoE) model with around 3.6 billion active parameters, offering performance comparable to OpenAI’s proprietary o3‑mini benchmarks. It can run efficiently on consumer hardware with as little as 16 GB of memory, making it highly suitable for local deployment on desktops or laptops.

In contrast, the 120B variant with about 117–120 billion parameters, of which around 5.1 billion are active, delivers near‑parity performance with the o4‑mini model on reasoning tasks. It runs on a single 80 GB GPU, offering enterprise‑grade inference at lower cost.

Key highlights:

- Open weight policy: Full usage, fine‑tuning, and redistribution rights under Apache 2.0.

- Agentic capabilities: Native support for function calling, structured outputs, web browsing, and Python execution workflows.

- Safety and trust: Rigorously evaluated against misuse, jailbreak resistance comparable to o4‑mini, though some limitations on instruction hierarchy and hallucination accuracy were noted.

- Democratizes access: Enables innovation without requiring massive infrastructure, ideal for regulated industries or privacy‑sensitive applications.

- Cloud integrations: Available on platforms like Hugging Face, LM Studio, AWS Bedrock, and SageMaker, combining flexibility with managed hosting options.

By open-sourcing its latest models, OpenAI signals a strategic shift that not only revives its original mission of making AI widely accessible but also responds to mounting competition from open-source frontrunners like Meta’s Llama and China’s DeepSeek. CEO Sam Altman described this initiative as a renewed pledge to democratize AI, offering greater customization flexibility while upholding strict safety standards.

Technical breakdown:

| Model | Active Parameters | Approx. Disk Size | Suitable Hardware | Benchmark Tier |

| gpt‑oss‑20b | ~3.6 B | ~14–16 GB | 16 GB GPU / Laptop | Comparable to o3‑mini |

| gpt‑oss‑120b | ~5.1 B | ~60 GB (quantized) | 80 GB GPU (e.g. H100 class) | Near o4‑mini |

Beyond broad accessibility, OpenAI’s rollout also aligns with commercial expansion: AWS integration via Amazon Bedrock and SageMaker JumpStart offer scalable access, with gpt‑oss‑120b touted as up to three times more cost-efficient than DeepSeek‑R1 and Gemini models.