When OpenAI released GPT-4o, one of the most exciting features was its 128,000-token context window. This is a massive upgrade compared to previous models like GPT-3.5 or even GPT-4, which had context limits of 8K or 32K tokens. But what does a 128K context window mean? And more importantly, how can you use it effectively in real-world scenarios?

What Is a Context Window in GPT-4o?

The context window defines the maximum amount of text a model can interpret, analyze, and remember within a single session. It includes not only the prompt you provide but also the complete response generated by the model during the same interaction. You can think of it as the model’s short-term memory system.

- 1 token = ~4 characters or ~0.75 words

- So 128,000 tokens = around 300 pages of text

That’s a lot of memory! This lets GPT-4o understand longer documents, bigger conversations, and more context without forgetting earlier parts.

Why a Large GPT-4o Context Window Matters?

In earlier models, if your input got too long, the model would lose track of earlier parts. That made it hard to:

- Analyze large documents

- Write long reports

- Continue coding projects with context

- Handle lengthy customer support chats

With GPT-4o’s 128K token window, you can now work on complex tasks without breaking them into small parts.

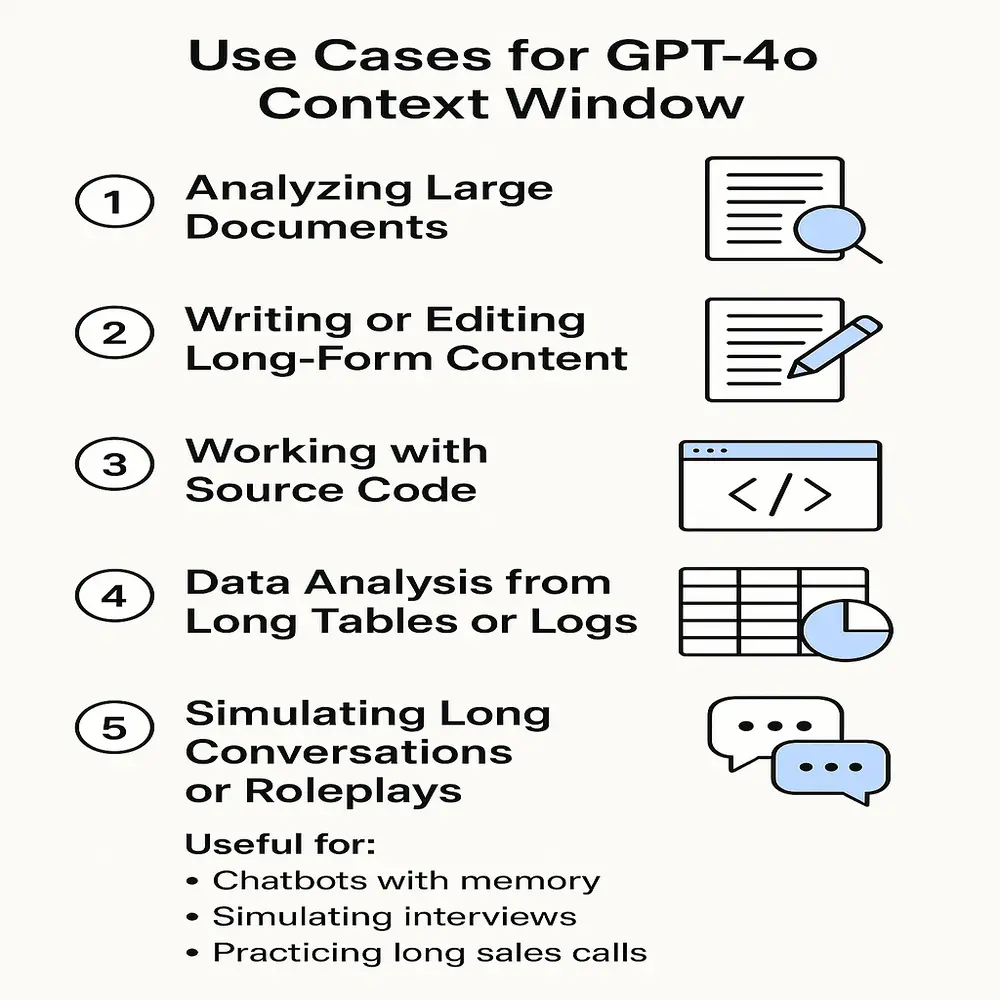

Best Use Cases

Here are the real-world ways to take advantage of the full window:

1. Analyzing Large Documents

You can input long research papers, legal contracts, books, or manuals, and ask the model to:

- Summarize the whole text

- Extract important facts

- Answer questions about the content

2. Writing or Editing Long-Form Content

Writers can use GPT-4 to:

- Generate chapters of a book

- Rewrite or edit full articles or scripts

- Maintain a consistent tone across thousands of words

3. Working with Source Code

Developers can:

- Feed the model large codebases

- Ask for debugging across multiple files

- Get summaries or explanations for entire projects

4. Data Analysis from Long Tables or Logs

If you feed GPT-4o a CSV or logs with thousands of rows:

- It can spot patterns

- Answer queries based on full data

- Identify errors or anomalies

5. Simulating Long Conversations or Roleplays

Useful for:

- Chatbots with memory

- Simulating interviews

- Practicing long sales calls

Tips to Use the Full Context Window Effectively

Just because the model can handle 128K tokens doesn’t mean it’s always smart to max it out. Here are some helpful tips:

- Be structured: Use headings, bullet points, and clear sections when feeding large content.

- Use references: Label different parts like “Part A”, “Chapter 1”, “Section X” so you can refer back easily.

- Ask focused questions: Instead of “Summarize this,” ask, “Summarize Chapter 2 with focus on key conflicts.”

- Avoid duplication: Don’t repeat the same content in different parts, as it wastes tokens.

- Trim fluff: Cut filler words or irrelevant lines. When your input is well-organized and structured, the model can generate responses that are significantly more precise and meaningful.

How to Input Large Data into GPT-4o?

If you’re using the OpenAI API:

- You can send up to 128K tokens directly

- Use system, user, and assistant roles to guide the model

- Always measure token size using OpenAI’s tokenizer tools

If you’re using ChatGPT (Pro version):

- Some platforms only allow ~35K tokens

- Use platforms like OpenAI Playground or API wrappers for full access

How Much Context Can You Use in ChatGPT?

Even though GPT-4o supports 128K in the backend, ChatGPT UI (even Pro) may not give access to all of it. Current state:

| Platform | Max Context |

| ChatGPT Free | ~8K tokens (GPT-3.5) |

| ChatGPT Pro (GPT-4o) | Often capped around 32K–35K |

| OpenAI Playground/API | Full 128K available |

| Azure OpenAI | Depends on the deployment tier |

To access the complete 128K token capacity, you’ll need to use the OpenAI API directly rather than the standard user interface.

Cost & Speed Considerations

Using 128K tokens isn’t cheap or fast:

Cost (as of June 2024):

- Input: ~$0.005–$0.01 per 1K tokens

- Output: Similar or slightly higher

- 128K tokens round trip could cost $1–$2 per request

Latency increases with token count. A 5K input responds in ~1–3 seconds, while 100 K+ can take 20+ seconds.

Tip: Break up tasks intelligently to save time and money.

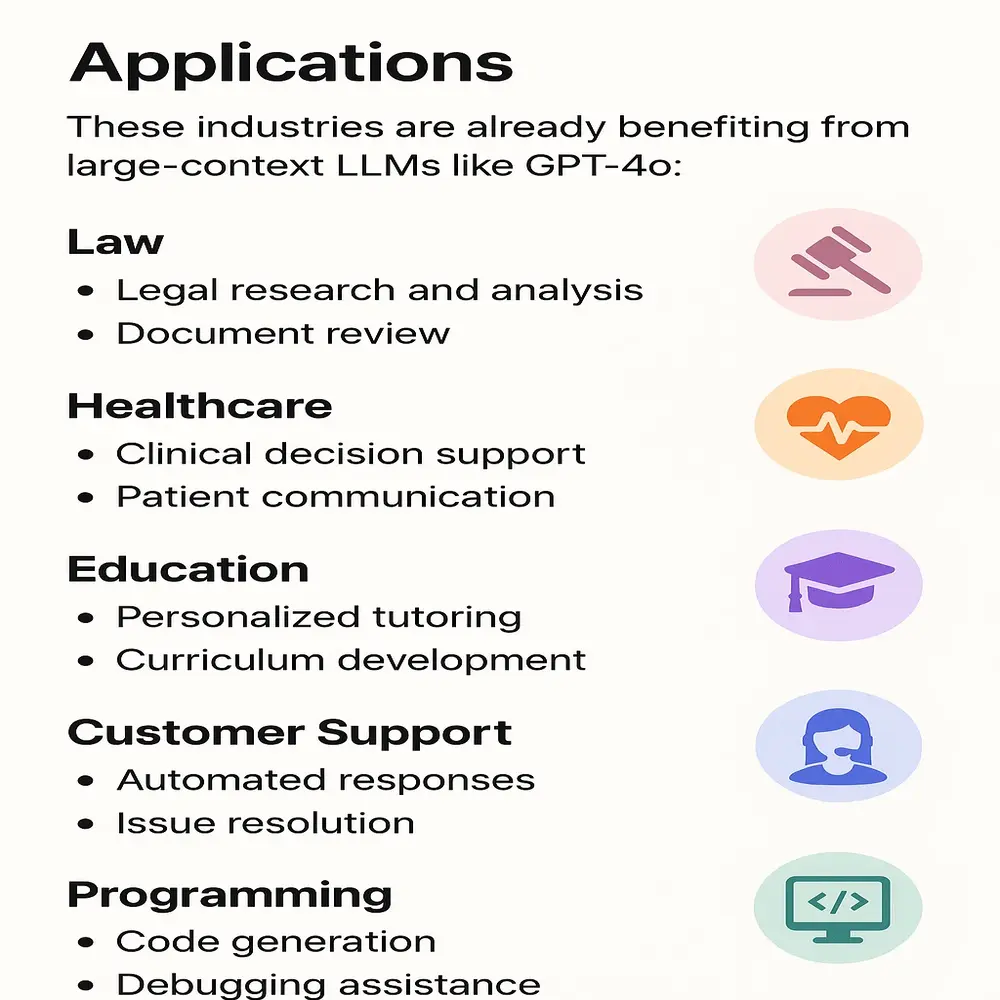

Real-World Industry Applications

These industries are already benefiting from large-context LLMs like GPT-4o:

- Law: Reviewing case files and contracts end-to-end

- Healthcare: Summarizing patient history from EHR systems

- Education: Creating personalized study guides from textbooks

- Customer Support: Long-term memory for better assistance

- Programming: Analyzing entire repositories or configuration systems

Limitations

Even though GPT-4o has a long memory, it’s not perfect.

- Performance may drop near the full 128K range

- It doesn’t truly “understand”: it predicts the next likely words based on input

- Latency (delay) increases with larger inputs

- Cost: Using the full context can get expensive on the API, especially with frequent calls

So use it wisely. Not all tasks need the full 128K tokens.

Multimodal Bonus: Text + Images + Audio

GPT-4o is multimodal, meaning:

- You can combine images, text, and audio into a single input

- It can analyze all of them in one conversation, using the full context window

For example:

- Upload a research paper, a voice memo, and an image chart

- Ask GPT-4o to summarize all of it together

This opens up powerful new use cases in marketing, education, and product development.

Future of Large GPT-40 Context Windows

AI is moving toward models that can remember millions of tokens. But right now, GPT-4o’s 128K is the sweet spot between speed, cost, and flexibility.

Shortly, expect:

- Smarter chunking and retrieval tools

- Hybrid memory (long-term + short-term)

- Lower latency with big windows

Final Thoughts

The 128K token context window in GPT-4o lets users work with large documents or data without losing important details. It’s useful for developers, writers, and researchers. To get the best results, use it only when needed, keep input organized, and ask context-based questions. Used wisely, it can save time and offer deeper AI support.