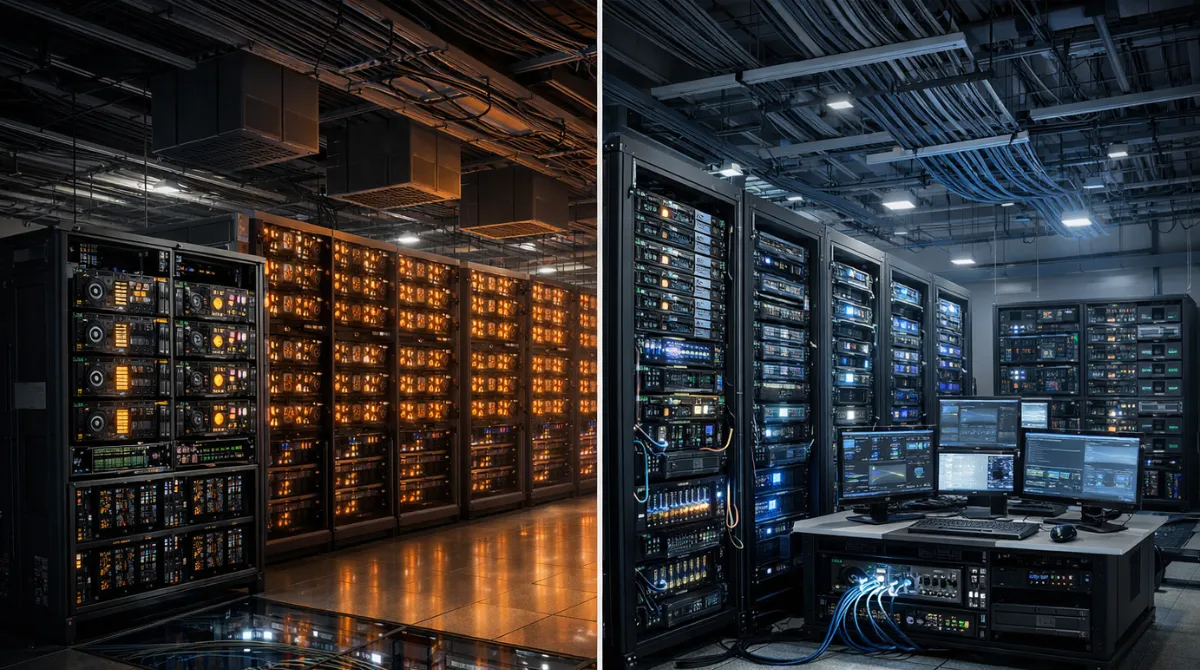

AI data centers often feature rows of GPUs and high-speed networks, but engineers design training and inference facilities to win very different performance battles. Both may run similar accelerators, yet the surrounding architecture, operations and economics diverge quickly once workload priorities are clear.

This guide breaks down what changes and why across compute design, networking, power, cooling, storage, reliability and deployment patterns. The goal is to make it easier to plan capacity, avoid bottlenecks and align infrastructure with model lifecycle needs.

What Are AI Training Data Centers?

AI training data centers are optimized to build and refine models by processing massive datasets through repeated iterations. They prioritize sustained throughput, high GPU utilization and efficient parallelism across many nodes.

Training runs tend to be long, expensive and sensitive to cluster-wide efficiency. Small percentage improvements in utilization or communication overhead can translate into significant cost and time savings.
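A rough way to see why small utilization gains matter is to model run cost as inversely proportional to utilization. The sketch below assumes a fixed amount of ideal compute per GPU and a flat hourly price; the function name and every number are hypothetical and only illustrate the arithmetic.

```python
def training_run_cost(gpu_count, ideal_hours_per_gpu, utilization, price_per_gpu_hour):
    """Estimate run cost when wall-clock time scales inversely with utilization."""
    if not 0 < utilization <= 1:
        raise ValueError("utilization must be in (0, 1]")
    # Lower utilization stretches the wall-clock time needed for the same work.
    wall_clock_hours = ideal_hours_per_gpu / utilization
    return gpu_count * wall_clock_hours * price_per_gpu_hour


# Hypothetical inputs: 1,024 GPUs, 300 ideal hours each, $2.00 per GPU-hour.
baseline = training_run_cost(1024, 300, utilization=0.40, price_per_gpu_hour=2.00)
improved = training_run_cost(1024, 300, utilization=0.45, price_per_gpu_hour=2.00)
savings = baseline - improved
print(f"baseline ${baseline:,.0f}, improved ${improved:,.0f}, saved ${savings:,.0f}")
```

Under these assumptions, moving from 40 to 45 percent utilization cuts the bill by about 11 percent, because the saving is 1 - 0.40 / 0.45 regardless of cluster size or price.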

What Are AI Inference Data Centers?

AI inference data centers are optimized to serve predictions from trained models to products, internal tools and automated workflows. They prioritize low latency, steady response under variable demand and predictable quality of service.

Inference is usually deployed closer to users or to critical systems to reduce round-trip delay. It also needs safer, simpler change management since model updates can directly affect customer experience and operational decisions.

Same GPUs, Different Workload Priorities

Many organizations standardize on a small set of GPU platforms for procurement and operational simplicity. Even with the same accelerators, training is dominated by large-batch matrix math and frequent inter-GPU communication, while inference is dominated by request scheduling and tail latency control.

Training attempts to keep every GPU busy for hours or days with minimal interruption. Inference attempts to keep response time stable across traffic spikes, model variants and mixed precision settings.

- Training Priority. Maximize aggregate throughput, often measured in tokens per second or images per second at scale, and minimize time to train.

- Inference Priority. Minimize latency and cost per request while meeting service level objectives for p95 and p99 response times.

- Operational Priority. Training favors large scheduled jobs; inference favors continuous availability and safe rollouts.

These priorities influence every downstream design choice, from rack layouts to load balancing policies.
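The p95 and p99 objectives mentioned above can be made concrete with a small percentile calculation. This is a minimal sketch using the nearest-rank method; the `percentile` helper and the latency samples are illustrative assumptions, not a monitoring API.

```python
import math


def percentile(samples, pct):
    """Nearest-rank percentile: smallest sample with at least pct% of values at or below it."""
    if not samples:
        raise ValueError("samples must not be empty")
    ordered = sorted(samples)
    rank = max(1, math.ceil(pct * len(ordered) / 100))
    return ordered[rank - 1]


# Hypothetical latencies in milliseconds: 98 fast requests and 2 slow outliers.
latencies_ms = [20] * 98 + [900] * 2
mean_ms = sum(latencies_ms) / len(latencies_ms)
print(f"mean={mean_ms:.1f} ms")
print(f"p95={percentile(latencies_ms, 95)} ms, p99={percentile(latencies_ms, 99)} ms")
```

In this made-up sample the mean and p95 look healthy while p99 exposes the slow requests, which is why inference objectives are usually stated on tail percentiles rather than averages.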

Compute Architecture Differences

Training clusters are typically built as large pools of tightly coupled GPU nodes. They benefit from uniform hardware, consistent driver stacks and high-bandwidth GPU-to-GPU connectivity for distributed data parallel and model parallel training. Next-generation AI systems such as Nvidia’s Vera Rubin platform, which targets extreme-scale parallelism and high interconnect efficiency, follow the same tightly coupled design principles.

Inference clusters are often more heterogeneous because models vary in size, context length and batchability. They can mix GPU types, include CPU-heavy tiers for pre- and post-processing and rely on autoscaling to match demand.

Scheduling And Resource Allocation

Training uses batch schedulers that place big jobs across many GPUs while minimizing fragmentation. The scheduler is tuned for fairness, queue time and gang scheduling so that distributed jobs start with all needed resources.
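Gang scheduling can be sketched as an all-or-nothing placement check. The example below is a simplified illustration, not how any particular scheduler works: `try_gang_place` and the node names are hypothetical, and the best-fit ordering is one assumed way to limit fragmentation.

```python
def try_gang_place(free_gpus, nodes_needed, gpus_per_node):
    """Place a distributed job only if every worker can start at once.

    free_gpus maps node name -> free GPU count and is updated in place on success.
    Returns the chosen node names, or None if the whole gang does not fit.
    """
    # Best fit: prefer nodes with the least spare capacity that still fit,
    # leaving emptier nodes available for later large jobs.
    candidates = sorted(
        (name for name, free in free_gpus.items() if free >= gpus_per_node),
        key=lambda name: (free_gpus[name], name),
    )
    if len(candidates) < nodes_needed:
        return None  # all-or-nothing: never hold a partial allocation
    chosen = candidates[:nodes_needed]
    for name in chosen:
        free_gpus[name] -= gpus_per_node
    return chosen


free = {"node-a": 8, "node-b": 4, "node-c": 8, "node-d": 8}
print(try_gang_place(free, nodes_needed=3, gpus_per_node=8))  # ['node-a', 'node-c', 'node-d']
print(try_gang_place(free, nodes_needed=2, gpus_per_node=4))  # None
```

The second job is refused rather than given the single node that fits, so it does not hold GPUs idle while waiting for the rest of its workers.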

Inference uses request-aware scheduling that considers model residency, KV cache behavior and token generation pacing. It often relies on warm pools and admission control to protect tail latency.
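Admission control can be as simple as capping the number of requests in flight, so excess load is rejected quickly instead of queueing and inflating tail latency. The class below is a minimal, single-threaded sketch; `AdmissionController` and its limit are illustrative assumptions, and a production version would need locking and per-model limits.

```python
class AdmissionController:
    """Cap concurrent requests so queueing delay cannot grow without bound."""

    def __init__(self, max_in_flight):
        if max_in_flight < 1:
            raise ValueError("max_in_flight must be at least 1")
        self.max_in_flight = max_in_flight
        self.in_flight = 0
        self.rejected = 0

    def try_admit(self):
        """Admit the request if capacity remains; otherwise reject it immediately."""
        if self.in_flight >= self.max_in_flight:
            self.rejected += 1
            return False
        self.in_flight += 1
        return True

    def release(self):
        """Call when an admitted request finishes, successfully or not."""
        if self.in_flight > 0:
            self.in_flight -= 1


controller = AdmissionController(max_in_flight=2)
decisions = [controller.try_admit() for _ in range(3)]
print(decisions)  # [True, True, False]
controller.release()
print(controller.try_admit())  # True
```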

Precision And Memory Strategy

Training often uses mixed precision with optimizer state management and activation checkpointing to fit larger models. The design goal is to trade compute for memory when it increases overall throughput.

Inference focuses on memory efficiency for model weights and runtime caches, especially for large language models. Quantization, tensor parallelism and cache-aware batching are chosen to reduce cost without breaking latency targets.
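The memory pressure described here can be estimated with two standard back-of-the-envelope formulas: weight memory is parameter count times bytes per parameter, and KV cache memory is 2 (keys and values) times layers, KV heads, head dimension, sequence length, batch size and bytes per element. The model shape below is hypothetical and chosen only to make the numbers easy to follow.

```python
def weight_bytes(param_count, bytes_per_param):
    """Memory needed to hold model weights at a given precision."""
    return param_count * bytes_per_param


def kv_cache_bytes(layers, kv_heads, head_dim, seq_len, batch_size, bytes_per_elem):
    """Memory for cached keys and values across all layers of a decoder-only transformer."""
    return 2 * layers * kv_heads * head_dim * seq_len * batch_size * bytes_per_elem


# Hypothetical 7-billion-parameter model with 32 layers, 32 KV heads, head size 128.
params = 7_000_000_000
fp16_weights = weight_bytes(params, 2)    # 16-bit weights
int4_weights = weight_bytes(params, 0.5)  # 4-bit quantized weights
cache = kv_cache_bytes(layers=32, kv_heads=32, head_dim=128,
                       seq_len=4096, batch_size=8, bytes_per_elem=2)

print(f"FP16 weights: {fp16_weights / 1e9:.1f} GB")
print(f"INT4 weights: {int4_weights / 1e9:.1f} GB")
print(f"KV cache (8 x 4096 tokens, FP16): {cache / 2**30:.1f} GiB")
```

With these assumed dimensions the KV cache for eight 4,096-token sequences is larger than the FP16 weights themselves, which illustrates why cache-aware batching and quantization are weighed together.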

Networking Requirements: Throughput Vs Latency

Training is network intensive because each step requires synchronization across GPUs and nodes. High bisection bandwidth, predictable congestion control and low jitter matter because collective communication patterns can amplify small network issues.
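To see how synchronization cost depends on the fabric, a common first-order model for ring all-reduce is useful: each GPU sends and receives 2(N-1)/N times the message size, plus a fixed latency for each of the 2(N-1) steps. Ring all-reduce is one algorithm among several and is used here only as an assumed example; the bandwidth and message size are hypothetical.

```python
def ring_allreduce_seconds(num_gpus, message_bytes, link_bytes_per_sec,
                           per_step_latency_sec=0.0):
    """First-order time estimate for a ring all-reduce across num_gpus workers."""
    if num_gpus < 2:
        return 0.0  # nothing to synchronize
    steps = 2 * (num_gpus - 1)  # reduce-scatter phase plus all-gather phase
    bandwidth_term = steps / num_gpus * message_bytes / link_bytes_per_sec
    latency_term = steps * per_step_latency_sec
    return bandwidth_term + latency_term


# Hypothetical: 1 GB of gradients, 50 GB/s per link, 5 microseconds per step.
for n in (8, 64, 512):
    t = ring_allreduce_seconds(n, 1e9, 50e9, per_step_latency_sec=5e-6)
    print(f"{n:4d} GPUs: {t * 1e3:.2f} ms per all-reduce")
```

In this model the bandwidth term approaches a constant as the ring grows, while the latency term grows linearly with GPU count, so per-hop jitter becomes more visible at scale.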

Inference networks prioritize consistent low latency from edge to service tier and between microservices. East-west traffic can be significant, but it is dominated by many small flows rather than large all-reduce bursts.

- Training Fabric Focus. High throughput with strong support for collectives, often requiring careful topology planning and oversubscription control.

- Inference Fabric Focus. Low latency and fast failover with robust load balancing, service discovery and traffic shaping.

- Control Plane Focus. Training emphasizes job placement and telemetry; inference emphasizes rate limiting, canary routing and fault isolation.

Once the network objective is defined, the switch layer, cabling density and monitoring strategy fall into place more naturally.
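Oversubscription control, mentioned in the training fabric bullet, comes down to comparing a switch's server-facing capacity with its uplink capacity. The helper below is a small sketch with hypothetical port counts and speeds.

```python
def oversubscription_ratio(downlink_ports, downlink_gbps, uplink_ports, uplink_gbps):
    """Ratio of server-facing bandwidth to uplink bandwidth on a leaf switch."""
    uplink_capacity = uplink_ports * uplink_gbps
    if uplink_capacity <= 0:
        raise ValueError("uplink capacity must be positive")
    return downlink_ports * downlink_gbps / uplink_capacity


# Hypothetical leaf configurations.
training_leaf = oversubscription_ratio(32, 400, 32, 400)   # non-blocking
inference_leaf = oversubscription_ratio(48, 100, 8, 200)   # oversubscribed
print(f"training leaf {training_leaf:.0f}:1, inference leaf {inference_leaf:.0f}:1")
```

A 1:1 ratio means every server port can send at line rate through the uplinks at once, which suits collective-heavy training; a higher ratio can be acceptable when traffic consists of many small, uncorrelated flows.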

Power And Cooling Design Differences

Training environments often run GPUs at high and steady utilization, pushing racks toward peak power draw for long periods. This favors power delivery sized for continuous loads, stable voltage and efficient distribution at high density.

Inference power draw can be bursty due to traffic patterns, autoscaling events and model routing changes. Facilities still need high density capability, but they benefit from strategies that handle rapid load changes without wasting capacity.

Thermal Profiles And Mechanical Layout

Training racks generate consistent heat, which allows teams to plan around fixed airflows or liquid cooling loops. Because a uniform load keeps hot spot risk low and predictable, layouts often use repeatable, symmetric rack designs.

Inference racks may have mixed node types and uneven utilization. Cooling design needs better tolerance for localized hot spots and must support maintenance without destabilizing nearby services.

Storage And Data Flow In Training Vs Inference

Training data flow is dominated by streaming large datasets, shuffling and checkpointing. Storage must deliver high sequential throughput and support many parallel readers without causing GPU stalls.

Inference data flow is dominated by loading model artifacts, serving embeddings and writing logs and traces. Latency to fetch model weights, tokenizer assets and feature data can be more important than raw throughput.

Checkpointing And Artifact Management

Training needs frequent checkpoints to limit restart cost and to enable experimentation across runs. That requires reliable, high-bandwidth writes and metadata operations that do not bottleneck large clusters.
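How frequent is frequent enough? One widely used rule of thumb, Young's approximation, sets the interval to the square root of twice the checkpoint write time multiplied by the mean time between failures. The article does not prescribe this formula; it is offered as an assumed starting point, with hypothetical failure rates and the simplifying assumption that node failures are independent.

```python
import math


def cluster_mtbf_hours(node_mtbf_hours, node_count):
    """Approximate cluster MTBF, assuming independent node failures."""
    return node_mtbf_hours / node_count


def young_checkpoint_interval_hours(checkpoint_write_hours, mtbf_hours):
    """Young's approximation for the interval that minimizes expected lost time."""
    return math.sqrt(2 * checkpoint_write_hours * mtbf_hours)


# Hypothetical: 1,000 nodes, 50,000-hour node MTBF, 5-minute checkpoint writes.
mtbf = cluster_mtbf_hours(50_000, 1_000)
interval = young_checkpoint_interval_hours(5 / 60, mtbf)
print(f"cluster MTBF {mtbf:.0f} h, checkpoint roughly every {interval:.1f} h")
```

The formula makes the trade-off explicit: faster checkpoint writes or a larger cluster both push the recommended interval down, which is why write bandwidth matters more as clusters grow.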

Inference needs controlled artifact promotion, version pinning and fast rollback. Model registry integration and immutable artifacts help prevent configuration drift across regions and availability zones.
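Version pinning and rollback are easier to reason about when artifacts are addressed by a content hash and never overwritten. The in-memory `ModelRegistry` below is a hypothetical sketch of that idea, not the interface of any real model registry product.

```python
import hashlib


class ModelRegistry:
    """Toy registry: immutable artifacts keyed by SHA-256, with a promotion history."""

    def __init__(self):
        self._artifacts = {}  # digest -> bytes, never overwritten
        self._history = []    # promoted digests, newest last

    def register(self, blob):
        """Store an artifact and return its content digest, which acts as the pinned version."""
        digest = hashlib.sha256(blob).hexdigest()
        self._artifacts.setdefault(digest, blob)
        return digest

    def promote(self, digest):
        """Make a registered artifact the live version."""
        if digest not in self._artifacts:
            raise KeyError("unknown artifact digest")
        self._history.append(digest)

    def rollback(self):
        """Return to the previously promoted version."""
        if len(self._history) < 2:
            raise RuntimeError("no earlier version to roll back to")
        self._history.pop()
        return self._history[-1]

    @property
    def live(self):
        return self._history[-1] if self._history else None


registry = ModelRegistry()
v1 = registry.register(b"weights-v1")
v2 = registry.register(b"weights-v2")
registry.promote(v1)
registry.promote(v2)
registry.rollback()
print(registry.live == v1)  # True
```

Because the digest is derived from the bytes, two regions that pin the same digest are guaranteed to serve identical artifacts, which is the property that guards against configuration drift.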

Reliability And Fault Tolerance Needs

Training workloads can tolerate some failures if the system can restart from checkpoints and reschedule quickly. The emphasis is on reducing the probability of job-wide interruption and lowering mean time to recovery when failures occur.

Inference workloads are usually customer facing or mission critical, so availability and graceful degradation are central. Design choices often include redundancy, health-based routing and capacity buffers to keep services within latency targets during faults.

- Training Resilience. Checkpoints, preemption-aware scheduling and fast node replacement reduce the cost of failures.

- Inference Resilience. Multi-zone deployment, circuit breakers and load shedding protect user experience.

- Operational Safety. Inference benefits from stricter change control, staged rollouts and observability tuned to real user impact.

Reliability planning becomes clearer when teams tie it directly to the business cost of downtime and the workload’s ability to recover.
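The circuit breaker named in the inference resilience bullet can be sketched in a few lines. This version opens after a run of consecutive failures and lets a trial request through once a cooldown has passed; the thresholds, class name and injectable clock are illustrative assumptions.

```python
import time


class CircuitBreaker:
    """Stop sending traffic to a failing backend until a cooldown has elapsed."""

    def __init__(self, failure_threshold=3, cooldown_seconds=30.0, clock=time.monotonic):
        self.failure_threshold = failure_threshold
        self.cooldown_seconds = cooldown_seconds
        self._clock = clock  # injectable so the behavior can be tested without sleeping
        self._consecutive_failures = 0
        self._opened_at = None

    def allow_request(self):
        if self._opened_at is None:
            return True  # closed: traffic flows normally
        # Open: block until the cooldown passes, then allow a trial request.
        return self._clock() - self._opened_at >= self.cooldown_seconds

    def record_success(self):
        self._consecutive_failures = 0
        self._opened_at = None

    def record_failure(self):
        self._consecutive_failures += 1
        if self._consecutive_failures >= self.failure_threshold:
            self._opened_at = self._clock()  # open, or re-open after a failed trial


now = [0.0]  # fake clock for the demonstration
breaker = CircuitBreaker(failure_threshold=2, cooldown_seconds=10.0, clock=lambda: now[0])
breaker.record_failure()
breaker.record_failure()
print(breaker.allow_request())  # False
now[0] = 10.0
print(breaker.allow_request())  # True
```

While the breaker is open, callers can route to another zone or return a degraded response, which keeps a single unhealthy backend from consuming the latency budget of every request.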

Cost Optimization Strategies For Each Type

Training cost optimization centers on maximizing GPU hours converted into useful learning. That means reducing idle time, minimizing communication overhead and keeping the data pipeline fast enough to feed accelerators.

Inference cost optimization centers on cost per output while meeting service objectives. Efficiency comes from right-sizing instances, improving batching and cache reuse and selecting precision and quantization levels that keep quality intact.

- Target The Dominant Bottleneck. Training bottlenecks often sit in the network and input pipeline, while inference bottlenecks often sit in memory and queueing.

- Right-Size The Cluster Shape. Training benefits from larger uniform pods; inference benefits from multiple smaller pools aligned to model tiers and latency classes.

- Automate Utilization Controls. Training improves with queue policies and reserved capacity; inference improves with autoscaling and admission control.

- Measure What Matters. Training should track end-to-end throughput and job completion time; inference should track p95 latency, error rate and cost per request.

Cost work is most effective when teams connect infrastructure metrics directly to model quality, delivery timelines and user experience.
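Cost per request, the inference metric named above, follows directly from instance price, peak throughput and average utilization. The function and figures below are hypothetical and meant only to show how the three inputs interact.

```python
def cost_per_thousand_requests(instance_cost_per_hour, peak_requests_per_sec,
                               average_utilization):
    """Serving cost per 1,000 requests for one instance at a given average utilization."""
    if not 0 < average_utilization <= 1:
        raise ValueError("average_utilization must be in (0, 1]")
    served_per_hour = peak_requests_per_sec * average_utilization * 3600
    return instance_cost_per_hour / served_per_hour * 1000


# Hypothetical: a $2.50/hour instance that can sustain 40 requests per second.
for utilization in (0.3, 0.6, 0.9):
    cost = cost_per_thousand_requests(2.50, 40, utilization)
    print(f"utilization {utilization:.0%}: ${cost:.4f} per 1,000 requests")
```

Doubling utilization halves the unit cost in this model, but running close to full utilization leaves little headroom for traffic spikes, so the target is usually set together with the latency objective.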

Where Are Training And Inference Data Centers Deployed?

Operators typically place training data centers where large power envelopes, high-density cooling and low-cost energy are available. Proximity to major network backbones still matters, but training is less sensitive to user distance.

Operators place inference data centers closer to users, applications and data sources to cut latency and reduce bandwidth costs. Many organizations combine centralized inference for batch use with regional inference for real-time services.

How Do Training And Inference Work Together In The AI Lifecycle?

Training produces model weights, evaluation reports and artifacts that inference systems serve at scale. The handoff is not a single event, because teams continually retrain, fine-tune and patch models as data shifts and requirements change.

Strong lifecycle design treats training and inference as connected systems with shared governance. That includes consistent telemetry, reproducible builds and clear promotion rules from experimental checkpoints to production releases.

- Data And Feedback Loops. Inference telemetry informs training data curation, safety tuning and regression testing.

- Model Version Control. Artifact immutability and compatibility checks reduce deployment risk.

- Capacity Planning. Training schedules and inference seasonality can compete for GPUs, so portfolio planning prevents sudden shortages.

When the lifecycle is cohesive, infrastructure decisions become less reactive and more aligned to product delivery.

Key Differences Between Training And Inference Data Centers

The simplest way to compare the two is to map their dominant constraints. Training depends on efficient distributed compute across many GPUs, while inference depends on low latency, high concurrency and stable service behavior.

| Design Area | Training Data Centers | Inference Data Centers |
|---|---|---|
| Primary Goal | Maximum throughput and shortest time to train | Low latency and predictable service |
| Networking | High bisection bandwidth for collectives | Low latency routing and fast failover |
| Storage Pattern | High read throughput and frequent checkpoints | Fast model loading and consistent artifact rollout |
| Reliability Strategy | Checkpoint and restart, job recovery focus | Redundancy, graceful degradation, strict SLOs |

These differences explain why copying a training cluster design into production serving often produces disappointing results. They also explain why a latency-first inference stack can be inefficient for large-scale training.

Conclusion

AI training and inference data centers may use the same GPUs, but engineers design them to achieve very different outcomes. Training rewards throughput, scalable parallelism and efficient data pipelines, while inference rewards low latency, reliability and careful control of cost per request.

The best results come from designing each environment around its real bottlenecks, then connecting them through a disciplined AI lifecycle. With that approach, infrastructure becomes a multiplier for model quality and dependable delivery rather than a recurring constraint.