AI teams keep running into the same wall: models grow faster than compute budgets, memory bandwidth and power delivery can comfortably follow. The Nvidia Vera Rubin Platform is positioned as a next-generation foundation for AI computing, aiming to improve performance, efficiency and scalability across training and inference.

This guide explains what the platform is, what makes it different, and how to think about its impact on infrastructure planning. It focuses on practical decision points like performance per watt, networking, memory and software compatibility.

What Is The Nvidia Vera Rubin Platform?

The Nvidia Vera Rubin Platform refers to a full-stack AI computing platform centered on a new GPU architecture and the surrounding system building blocks. That usually includes the accelerator, CPU pairing, high-speed interconnects, memory subsystem and software stack used to deploy AI workloads at scale.

This full-stack approach helps explain why Nvidia GPUs dominate AI workloads: tight CUDA optimization and hardware-software integration consistently translate raw compute into real performance.

Rather than being a single chip, it is best viewed as an integrated platform designed for modern AI pipelines. Those pipelines include large-scale pretraining, post-training alignment, multi-modal workloads, retrieval-augmented generation and latency-sensitive inference.

At the platform level, the goal is to reduce bottlenecks that show up when thousands of GPUs work on one job. That means improving compute throughput, feeding the cores with enough memory bandwidth and keeping GPU-to-GPU communication efficient under heavy load.

Key Features Of The Vera Rubin Platform

Vera Rubin is expected to prioritize balanced system design. Raw compute matters, but real AI performance depends on memory, interconnect and software efficiency working together.

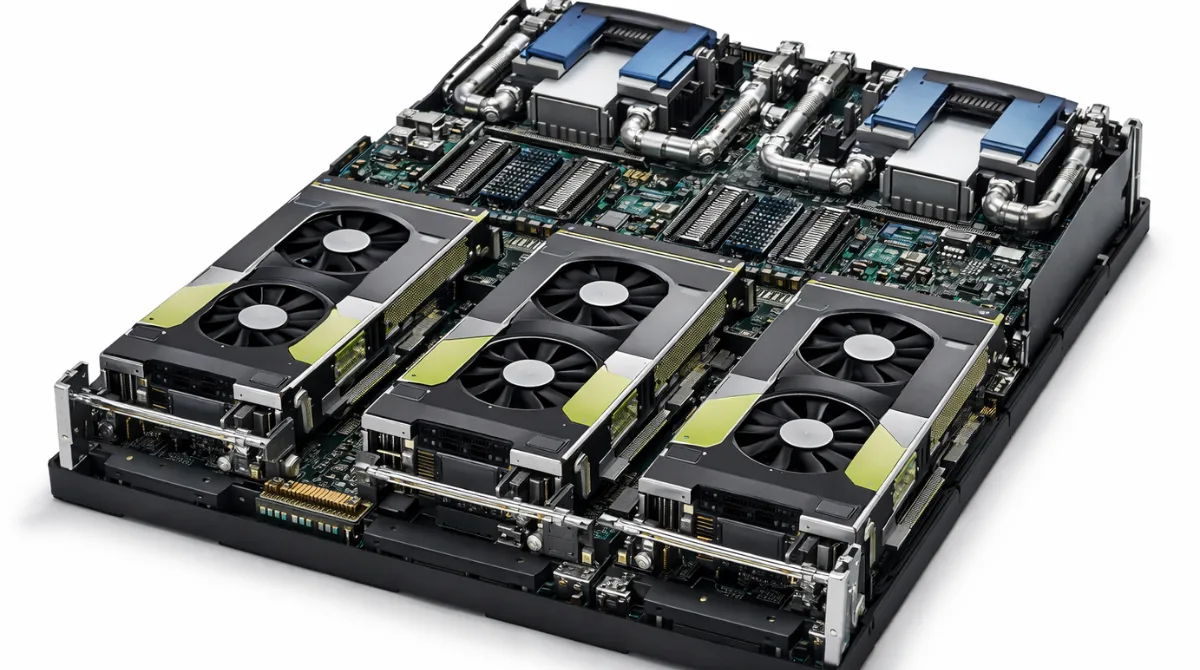

- Higher AI compute density. More usable throughput per accelerator helps shorten training cycles and increase batch sizes without stalling.

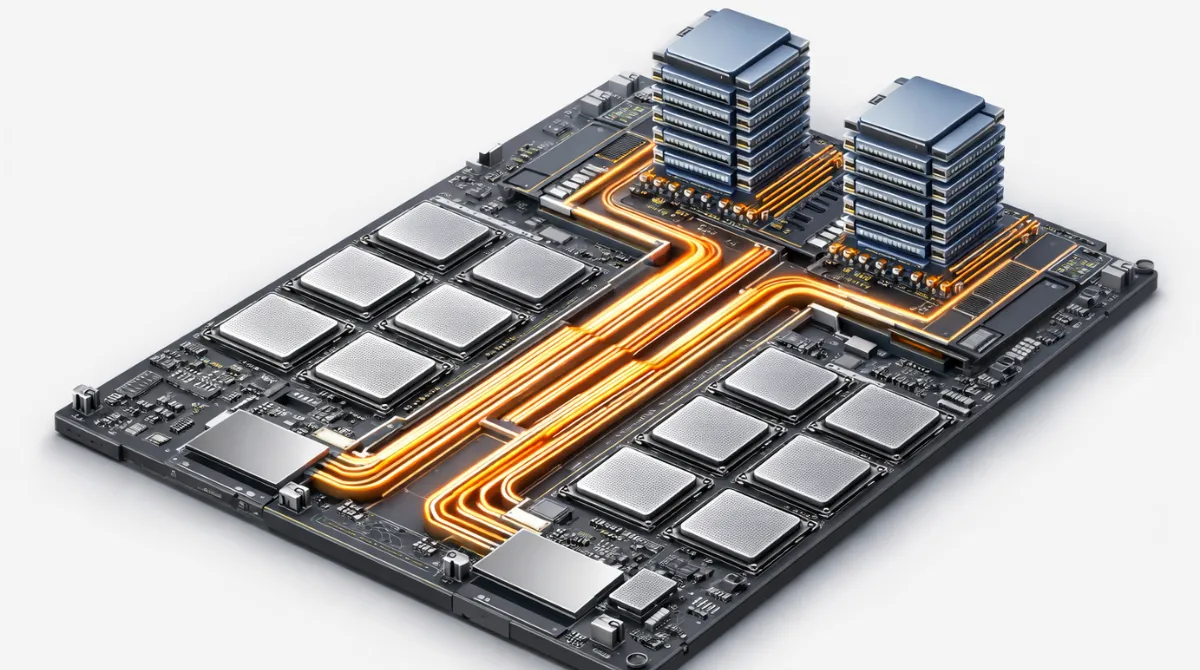

- Stronger memory and bandwidth alignment. Bigger models and longer context windows increase pressure on memory capacity, bandwidth and data movement efficiency.

- Faster scale-up and scale-out fabric. High-speed GPU interconnects and networking reduce synchronization overhead and keep large clusters productive.

- Improved efficiency per watt. Data center limits are often power and cooling, so performance gains must arrive with better energy efficiency.

- Platform-level software integration. Compilers, libraries, kernels and orchestration tools determine whether theoretical performance becomes real throughput.

These features matter most when workloads are communication-heavy and memory-bound. Many transformer and multi-modal workloads fall into that category once they scale.

How Vera Rubin Improves AI Performance

AI performance improvements usually come from three places: faster math for common data types, reduced data movement costs and better parallelism across many accelerators.

On the compute side, modern accelerators rely on specialized tensor math and mixed-precision pathways. Better support for training-friendly and inference-friendly numeric formats can increase throughput while maintaining model quality.

Memory is where many systems fall behind. When attention, KV cache growth, and activation storage surge, memory bandwidth and capacity become decisive. Platform improvements that keep data closer to compute and move it more efficiently can lift end-to-end throughput even without dramatic changes to peak FLOPS.
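To make the KV cache pressure concrete, here is a minimal sketch of the standard size estimate for a decoder-only transformer. The model shape in the example is hypothetical and chosen only for illustration; it does not describe any specific model or Vera Rubin configuration.

```python
def kv_cache_bytes(num_layers, num_kv_heads, head_dim,
                   seq_len, batch_size, bytes_per_value=2):
    """Estimate bytes needed to cache keys and values during inference.

    bytes_per_value=2 assumes a 16-bit format such as FP16 or BF16.
    """
    # The leading 2 accounts for storing both a key and a value tensor.
    return (2 * num_layers * num_kv_heads * head_dim
            * seq_len * batch_size * bytes_per_value)


# Hypothetical model shape (assumption, for illustration only).
size = kv_cache_bytes(num_layers=32, num_kv_heads=8, head_dim=128,
                      seq_len=8192, batch_size=16)
print(f"KV cache: {size / 2**30:.1f} GiB")
```

Because the estimate is linear in both sequence length and batch size, doubling the context window or the number of concurrent requests doubles the cache, which is why long-context serving tends to hit memory limits before compute limits.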

Scaling is the third lever. Large jobs depend on collective operations, gradient synchronization and pipeline coordination. Faster interconnect and better communication scheduling reduce idle time, which raises effective utilization and improves time to train.
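The cost of gradient synchronization can be approximated with the common latency-bandwidth model of a ring all-reduce. The sketch below is a simplified estimate, not a description of how any particular communication library schedules traffic, and the gradient size and link speeds are hypothetical.

```python
def ring_allreduce_seconds(message_bytes, num_gpus,
                           link_bytes_per_sec, latency_sec=0.0):
    """Estimate ring all-reduce time with a simple latency-bandwidth model."""
    if num_gpus < 2:
        return 0.0  # nothing to synchronize on a single device
    # A ring all-reduce runs a reduce-scatter followed by an all-gather,
    # each taking (num_gpus - 1) steps.
    steps = 2 * (num_gpus - 1)
    # Every step moves one chunk of size message_bytes / num_gpus per link.
    chunk_bytes = message_bytes / num_gpus
    return steps * (latency_sec + chunk_bytes / link_bytes_per_sec)


# Hypothetical numbers: 1 GB of gradients across 8 GPUs.
slow = ring_allreduce_seconds(1e9, 8, link_bytes_per_sec=100e9)
fast = ring_allreduce_seconds(1e9, 8, link_bytes_per_sec=200e9)
print(f"100 GB/s links: {slow * 1e3:.2f} ms, 200 GB/s links: {fast * 1e3:.2f} ms")
```

In this model, doubling link bandwidth halves the bandwidth term but leaves the per-step latency untouched, so very large clusters also depend on low latency and on overlapping communication with compute.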

Performance Drivers That Matter Most In Practice

- End-to-end utilization. High utilization means less waiting on memory, networking, or kernel launch overhead.

- Token throughput. Inference performance is best judged by tokens per second at a given quality and latency target.

- Time to accuracy. Training success is measured by time to reach a quality threshold, not peak specifications.

- Stability under load. Predictable performance at scale reduces rework, job restarts, and wasted compute.

These drivers tie directly to operating cost. Better efficiency reduces both power draw and the number of accelerators needed for a target service level.
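A rough way to see that link is to size a fleet for a target throughput. The following sketch assumes per-accelerator throughput and power figures that are entirely hypothetical; real values have to come from your own benchmarks at your quality and latency targets.

```python
import math


def fleet_for_target(target_tokens_per_sec, tokens_per_sec_per_accel,
                     watts_per_accel):
    """Return (accelerator count, accelerator power in kW) for a throughput target."""
    # Round up: a fractional accelerator still means buying a whole one.
    count = math.ceil(target_tokens_per_sec / tokens_per_sec_per_accel)
    power_kw = count * watts_per_accel / 1000.0
    return count, power_kw


# Hypothetical comparison of two platform generations (assumed numbers).
baseline = fleet_for_target(1_000_000, tokens_per_sec_per_accel=2_500,
                            watts_per_accel=700)
improved = fleet_for_target(1_000_000, tokens_per_sec_per_accel=4_000,
                            watts_per_accel=800)
print("baseline:", baseline)
print("improved:", improved)
```

With these assumed figures, the second platform draws more power per accelerator yet needs fewer accelerators and less total power for the same service level, which is the effect the drivers above are meant to capture.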

Vera Rubin Vs Previous Nvidia AI Platforms

Comparisons across Nvidia generations typically show a pattern. Each new platform aims to advance tensor throughput, memory capabilities and interconnect bandwidth while improving software support for the latest model architectures.

Previous Nvidia AI platforms delivered major leaps in mixed precision, transformer efficiency and cluster-scale networking. Vera Rubin is expected to extend that trajectory with tighter platform-level integration that supports larger models, longer context and more concurrent inference.

| Decision Factor | Earlier Nvidia AI Platforms | Vera Rubin Platform Direction |
|---|---|---|
| Training Throughput | Strong gains from tensor cores and mixed precision | Higher usable throughput with better balance across compute and memory |
| Memory And Bandwidth | Improved HBM and cache strategies over time | Further emphasis on feeding larger models and longer sequences efficiently |
| Scaling Efficiency | High performance within nodes and across clusters | Lower communication overhead and higher effective utilization at scale |
| Inference Economics | Better kernels and quantization support in newer stacks | More throughput per watt and stronger multi-tenant serving characteristics |

This type of comparison helps with planning. Teams should focus on where their bottleneck sits today and whether platform changes target that specific constraint.

What To Evaluate When Comparing Platforms

Peak accelerator specs rarely predict real cluster results. A better approach is to map the platform to your workload profile and constraints.

- Model mix. LLM pretraining, fine-tuning, vision transformers and recommendation workloads stress different parts of the system.

- Parallelism strategy. Tensor, pipeline and expert parallelism behave differently under networking and memory pressure.

- Serving shape. Long context, streaming and tool-using agents can shift inference bottlenecks from compute to memory and bandwidth.

- Operational limits. Power, rack density and cooling can determine what performance is actually deployable.

Once the workload characteristics are clear, benchmarking can be more targeted. That reduces the chance of overbuying for peak performance that never materializes in production.
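One common way to characterize a workload before benchmarking is the roofline model, which compares a kernel's arithmetic intensity (FLOPs per byte moved) with the hardware's ratio of peak compute to peak memory bandwidth. The sketch below uses hypothetical peak figures, not published specifications for any platform.

```python
def roofline(flops, bytes_moved, peak_flops_per_sec, peak_bytes_per_sec):
    """Classify a kernel as compute- or memory-bound under the roofline model."""
    intensity = flops / bytes_moved                    # FLOPs per byte
    ridge = peak_flops_per_sec / peak_bytes_per_sec    # hardware balance point
    # Attainable throughput is capped by whichever resource runs out first.
    attainable = min(peak_flops_per_sec, intensity * peak_bytes_per_sec)
    bound = "compute-bound" if intensity >= ridge else "memory-bound"
    return bound, attainable


# Hypothetical accelerator: 1e15 FLOP/s peak, 3e12 bytes/s memory bandwidth.
PEAK_FLOPS, PEAK_BW = 1e15, 3e12

# Decode-style matrix-vector work: about 2 FLOPs per 2-byte weight read.
print(roofline(2.0, 2.0, PEAK_FLOPS, PEAK_BW))
# Large-batch matrix multiply with heavy weight reuse (assumed intensity).
print(roofline(1000.0, 1.0, PEAK_FLOPS, PEAK_BW))
```

A workload that lands on the memory-bound side gains little from higher peak FLOPS alone, so benchmarks for it should emphasize bandwidth and capacity instead.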

Who The Nvidia Vera Rubin Platform Is For

The platform is most relevant for organizations running AI at data center scale. That includes both frontier training and high-volume inference, especially where reliability and total cost of ownership matter as much as raw speed.

It is also relevant for teams modernizing their AI infrastructure. Migrating from fragmented stacks toward a more unified platform can simplify operations, improve reproducibility, and reduce time spent tuning kernels and communication settings.

Best-Fit Workloads And Environments

- Large model training. Workloads that scale across many accelerators and spend significant time in collective operations.

- Enterprise inference at scale. Multi-tenant serving with strict latency targets and high throughput needs.

- Multi-modal pipelines. Combined text, image, and audio processing that increases memory and bandwidth pressure.

- Research clusters. Environments that need flexible experimentation with new architectures and training recipes.

Fit is ultimately defined by constraints. If your system is power-limited, network-limited or memory-limited, a platform shift can deliver a disproportionate benefit compared to a simple accelerator refresh.

What Vera Rubin Means For The Future Of AI Computing

The direction suggested by Vera Rubin points toward AI systems that behave more like purpose-built factories. The core goal is to turn more of the installed hardware into useful model progress, measured in tokens trained or served per unit cost.

That future also raises the importance of software and orchestration. As clusters grow, scheduling, fault tolerance and observability become performance features, not optional tools.

Efficiency will remain a central requirement. Better performance per watt supports higher rack density, lowers operating cost and reduces the friction of expanding capacity.
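The relationship between performance per watt, rack density and energy cost can be sketched with simple arithmetic. The rack budget, overhead fraction and per-accelerator figures below are assumptions for illustration only.

```python
def accelerators_per_rack(rack_power_watts, watts_per_accel,
                          overhead_fraction=0.2):
    """Accelerators that fit in a rack power budget.

    overhead_fraction is an assumed share of rack power reserved for CPUs,
    networking, fans and power conversion losses.
    """
    usable_watts = rack_power_watts * (1.0 - overhead_fraction)
    return int(usable_watts // watts_per_accel)


def kwh_per_million_tokens(watts_per_accel, tokens_per_sec_per_accel):
    """Accelerator energy used to produce one million tokens, in kWh."""
    joules_per_token = watts_per_accel / tokens_per_sec_per_accel
    return joules_per_token * 1_000_000 / 3.6e6  # 1 kWh = 3.6e6 joules


# Hypothetical figures: a 40 kW rack and a 700 W accelerator at 2,500 tokens/s.
print(accelerators_per_rack(40_000, 700))
print(f"{kwh_per_million_tokens(700, 2_500):.4f} kWh per million tokens")
```

Under this simple model, energy per token falls in direct proportion to any gain in tokens per second per watt, while rack density depends on the absolute power draw of each accelerator.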

Infrastructure Shifts To Prepare For

- Higher network expectations. Faster GPUs require matching upgrades in fabric design and topology to avoid communication bottlenecks.

- More attention to memory planning. Context growth and KV cache behavior make memory a first-class design variable.

- Greater reliance on optimized libraries. Kernel quality and compiler maturity directly influence cost per token.

- Stronger platform standardization. Standard stacks simplify deployment across training and inference environments.

Planning around these shifts helps avoid partial upgrades that create new bottlenecks. A platform approach tends to deliver the most value when compute, networking, and software are upgraded together.

Conclusion

The Nvidia Vera Rubin Platform is best understood as a next-generation AI computing platform that aims to improve real-world throughput, scaling efficiency and operating economics. Its value will show up most clearly in workloads limited by memory bandwidth, interconnect performance and cluster-level utilization.

For infrastructure teams, the key is to evaluate the full system path from data movement to communication to serving behavior. When those constraints are matched to the platform strengths, upgrades become measurable in time to train, cost per token and overall data center efficiency.